Just putting the finishing touches to my dissertation in preparation for a final revision.

over 35 pages

over 10,000 words

looking forward to a five minute break.

Monday, 18 May 2009

Tuesday, 31 March 2009

Reset

If you think you need to reset something, then it's highly likely that the library has a reset call in it.

DiracReset(true, m_pDiracSystem);

Doh!


Sunday, 29 March 2009

Cue The Music

I ran into a slight hiccup earlier in the week when I realised I had one of two possible problems: either my timing calculation algorithm produced unavoidable rounding errors, or Reason 3 was not writing files out with exactly the right number of PCM samples in them. Either way, any track that was the full length of the sequence drifted out of time with the click track, which is not good.

No matter which of these was the cause, the choice of solutions was the same: add some silent PCM samples at the end of the audio sample to make up the 0.01 percent (guesstimate) missing, or time stretch the sample by a small amount to account for the missing samples. The second solution was my first choice, as the first could introduce pops/clicks in the sound if the discrepancy was large, although a discrepancy that large would cause other timing issues anyway. As the DIRAC time stretching library had already been integrated, it was just a case of finding the percentage of missing samples and processing the sound to the new length. This worked a charm and now my sequences can loop indefinitely.
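The arithmetic behind that correction can be sketched roughly as follows. This is only an illustration: the function names are hypothetical, it assumes the expected length is derived from the tempo, time signature and number of bars, and it does not touch the DIRAC API itself.

```cpp
#include <cmath>
#include <cstdint>

// Hypothetical helper: the number of PCM frames a sequence should contain,
// given its tempo, beats per bar, length in bars and sample rate.
std::int64_t ExpectedFrames(double bpm, int beatsPerBar, int bars, int sampleRate)
{
    const double secondsPerBeat = 60.0 / bpm;
    const double seconds = secondsPerBeat * beatsPerBar * bars;
    return static_cast<std::int64_t>(std::llround(seconds * sampleRate));
}

// Ratio to stretch by so that actualFrames becomes expectedFrames.
// A value slightly above 1.0 means the file is short and must be lengthened.
double StretchRatio(std::int64_t expectedFrames, std::int64_t actualFrames)
{
    return static_cast<double>(expectedFrames) / static_cast<double>(actualFrames);
}
```

For example, 8 bars of 4/4 at 120 bpm and 44.1 kHz should be 705,600 frames; a file holding 705,530 frames would need a ratio of roughly 1.0001, which is in the region of the 0.01 percent guessed at above.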

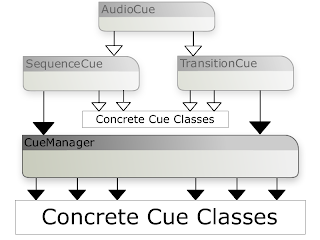

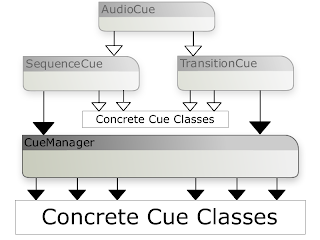

With the timing and the layering systems all functioning correctly it was now time to move on to the audio cues. In general there are two different roles that a cue can perform: altering the playback of a given sequence, or providing a transition between sequences. To help keep track of the various cues I use these two basic roles as separators. A cue manager is used to create each instance of a cue so that a record of it can be stored, allowing access to the cue at a later date. Here is a diagram of how the cue creation process is structured.

In this structure the cue manager uses a template Factory Method to instantiate cues of varying types, ensuring that the data type at least inherits the AudioCue class by only returning pointers to objects of that data type. For recording purposes two STL maps are used to store pointers to objects that are of type "SequenceCue" or "TransitionCue". As stated before, these types define the two distinguishing roles of any cue.

The foundation of these cues is the virtual method "Perform". Every concrete class must override this method in order to allow the cue to manipulate a sequence or set of sequences in some way. Structurally this design is similar to the Command pattern, in that the core of the design is based around an "execute" method. When calling a cue the application need not know what concrete type of cue it is calling, only that it is either a SequenceCue or a TransitionCue - the overridden "Perform" method handles all the intricate logic related to the cue.
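A minimal sketch of how the factory and the "Perform" override could fit together is shown here. Only AudioCue, SequenceCue, TransitionCue and Perform come from the description above; the Sequence struct, MuteCue, the string keys, the method names and the use of smart pointers are all assumptions made for the example, not the actual project code.

```cpp
#include <map>
#include <memory>
#include <string>
#include <type_traits>
#include <utility>

// Stand-in for whatever the application uses to represent a sequence.
struct Sequence { float volume = 1.0f; };

class AudioCue {
public:
    virtual ~AudioCue() = default;
    // Every concrete cue overrides this to act on a sequence.
    virtual void Perform(Sequence& sequence) = 0;
};

// The two roles a cue can have.
class SequenceCue : public AudioCue {};
class TransitionCue : public AudioCue {};

// Hypothetical concrete cue: silences the sequence it is given.
class MuteCue : public SequenceCue {
public:
    void Perform(Sequence& sequence) override { sequence.volume = 0.0f; }
};

class CueManager {
public:
    // Template factory method: T must derive from SequenceCue, and the
    // caller only ever receives a pointer to the base role type.
    template <typename T, typename... Args>
    SequenceCue* CreateSequenceCue(const std::string& name, Args&&... args)
    {
        static_assert(std::is_base_of<SequenceCue, T>::value,
                      "T must derive from SequenceCue");
        auto cue = std::make_unique<T>(std::forward<Args>(args)...);
        SequenceCue* raw = cue.get();
        m_sequenceCues[name] = std::move(cue);  // keep a record for later lookup
        return raw;
    }

    // Same idea for the transition role.
    template <typename T, typename... Args>
    TransitionCue* CreateTransitionCue(const std::string& name, Args&&... args)
    {
        static_assert(std::is_base_of<TransitionCue, T>::value,
                      "T must derive from TransitionCue");
        auto cue = std::make_unique<T>(std::forward<Args>(args)...);
        TransitionCue* raw = cue.get();
        m_transitionCues[name] = std::move(cue);
        return raw;
    }

    // Returns the stored cue, or nullptr if no cue has that name.
    SequenceCue* FindSequenceCue(const std::string& name) const
    {
        auto it = m_sequenceCues.find(name);
        return it == m_sequenceCues.end() ? nullptr : it->second.get();
    }

private:
    std::map<std::string, std::unique_ptr<SequenceCue>> m_sequenceCues;
    std::map<std::string, std::unique_ptr<TransitionCue>> m_transitionCues;
};
```

Calling code would do something like manager.CreateSequenceCue<MuteCue>("mute") and later call Perform on the returned SequenceCue pointer without ever naming MuteCue again.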

There are three ways of triggering a cue: on a beat, on a bar, or instantly. On a beat or bar could mean a specific beat or bar number in the sequence, or just the next beat or bar that will be played. This is where the click track proves useful, as its callback instantly (instantly when we call system::update ... those darn synchronous callbacks) tells us when we've hit a beat or bar, and from that we can start an activated cue.
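One way the three trigger modes might be handled is sketched below. The CueScheduler class and its method names are hypothetical, it only models "next beat" and "next bar" (not a specific beat or bar number), and it assumes the click track callback can say whether the beat it reports is the first of a bar.

```cpp
#include <functional>
#include <utility>
#include <vector>

enum class Trigger { Instant, NextBeat, NextBar };

// Hypothetical scheduler: holds activated cues until the click track
// reports the beat or bar they are waiting for.
class CueScheduler {
public:
    void Activate(Trigger trigger, std::function<void()> perform)
    {
        if (trigger == Trigger::Instant) {
            perform();  // nothing to wait for
            return;
        }
        m_pending.push_back({trigger, std::move(perform)});
    }

    // Call this from the click track callback. isBarStart is true when
    // the reported beat is the first beat of a bar.
    void OnBeat(bool isBarStart)
    {
        // Work on a local copy so a cue may safely activate another cue.
        std::vector<Pending> current;
        current.swap(m_pending);
        for (auto& p : current) {
            const bool due = p.trigger == Trigger::NextBeat ||
                             (p.trigger == Trigger::NextBar && isBarStart);
            if (due)
                p.perform();
            else
                m_pending.push_back(std::move(p));  // keep waiting
        }
    }

private:
    struct Pending {
        Trigger trigger;
        std::function<void()> perform;
    };
    std::vector<Pending> m_pending;
};
```

The function object passed to Activate would typically wrap a call to a cue's Perform method, so the scheduler itself never needs to know the concrete cue type.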

The design structure of the cue system keeps it highly modular, allowing for easy addition of new cues that could incorporate features such as standard DSP effects or additional sounds to be layered in the sequence.


Friday, 20 March 2009

Not Strictly Asynchronous

In order to keep the overhead of using FMOD Ex low, the developers consciously decided not to make it thread safe. While this is nice if you want to squeeze the most out of your audio code, the design of the API has left me with a slight issue relating to my click track implementation.

Callbacks are easy to set up in FMOD, but they do not work how you expect them to. In order to trigger a channel callback you must first call the FMOD system method "update". In fact, to update any of the 'asynchronous' features of FMOD you need to call system::update(), and this method must be called in the same thread as all other FMOD commands because of the non-thread-safe nature of the API.

For a simple while loop:

while (true)
{
    FmodSystem::Update();
}

Everything runs fine, and to a certain extent (depending on how time sensitive you are) the click track method would be enough to keep everything in time without the need for sample accurate sound stitching. However, once an element of delay is introduced - Sleep(30) - timing discrepancies begin occurring with the triggering of samples. With a delay of Sleep(100) the timing is really poor; with a delay of Sleep(1000) it's a mess. This is fairly obvious when you think about it: the callbacks would only be triggered every 1000 milliseconds or so, and when a beat occurs every 0.5 seconds in a 4/4, 120 bpm sequence, you can see where the delay is coming from.
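To put rough numbers on that, here is a simplified model of how late a beat is reported when callbacks can only fire at each poll of update. It is an idealisation with hypothetical function names: it assumes polls land at exact multiples of the sleep interval and that update itself takes no time.

```cpp
#include <cmath>

// Time (ms) at which a beat occurring at beatTimeMs is actually reported,
// if callbacks only fire on a poll made every pollIntervalMs.
double ReportedTimeMs(double beatTimeMs, double pollIntervalMs)
{
    return std::ceil(beatTimeMs / pollIntervalMs) * pollIntervalMs;
}

// How late the report is compared with when the beat really happened.
double LatenessMs(double beatTimeMs, double pollIntervalMs)
{
    return ReportedTimeMs(beatTimeMs, pollIntervalMs) - beatTimeMs;
}
```

Under this model a beat at 500 ms is reported 10 ms late with a 30 ms poll, and a full 500 ms late with a 1000 ms poll, by which time the following beat is already due.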

I am going to try handling the system::update() in its own thread to see if this can keep the callback timings consistent, but I will need to be careful not to access sensitive FMOD methods from this new thread.


Monday, 16 March 2009

Old Ideas Return

When breaking up a piece of music into various layers that play independently a major concern is how to keep these layers in time with each other. Wwise achieves this by making sure that each layer is of the same sample length. This is also how I got round the issue during Dare To Be Digital. The whole point of this project, however, is to allow for layers to be of varying lengths. So how can the synchronisation of these layers be achieved?

The first method I looked into simply involved counting the beats. If you have a sequence whose tempo and time signature you know, you can use them to work out how long, in milliseconds, a single beat should be. A timer can then be used to count the milliseconds between beats.
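As a quick illustration of that calculation (the function names are made up for the example, and it assumes the tempo counts the same beat unit as the time signature, as in 4/4):

```cpp
// Length of one beat in milliseconds at the given tempo.
double BeatLengthMs(double bpm)
{
    return 60000.0 / bpm;
}

// Length of one bar in milliseconds, given the number of beats per bar.
double BarLengthMs(double bpm, int beatsPerBar)
{
    return BeatLengthMs(bpm) * beatsPerBar;
}
```

At 120 bpm this gives 500 ms per beat and 2000 ms per bar of 4/4.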

While in theory this is a "sound" idea (sorry, but I had to get that pun in eventually), issues can occur with synchronising the start of the timer with the start of the sequence. A separate thread created by the FMOD API handles playback of the sounds, meaning that the timer is being calculated outwith the sound playback. With the time-critical nature of audio, and particularly music, this method is too unpredictable to use confidently, as the timer will always be separate from the source.

After realising the pitfalls of using a timer I returned to my original idea of using an audio sample as a "click track". The click track was based on a sample containing a single bar of audio that had markers placed on the beats. During playback of this sample, when the playhead passed one of the markers a callback would be triggered, indicating that we've hit a beat. With this method the timing is kept in the same thread as the playback of the audio, so timing discrepancies are reduced.
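If the markers were placed programmatically rather than by hand in an audio editor, their positions could be worked out along these lines. This is a sketch under that assumption; BeatMarkerOffsets is a hypothetical name, and attaching the markers to the sound is left to whatever the audio API provides.

```cpp
#include <cmath>
#include <cstdint>
#include <vector>

// Offsets, in PCM frames from the start of a one-bar click track,
// at which each beat marker would sit.
std::vector<std::uint32_t> BeatMarkerOffsets(double bpm, int beatsPerBar, int sampleRate)
{
    std::vector<std::uint32_t> offsets;
    const double framesPerBeat = sampleRate * 60.0 / bpm;
    for (int beat = 0; beat < beatsPerBar; ++beat) {
        // Round to the nearest whole frame so errors do not accumulate.
        offsets.push_back(static_cast<std::uint32_t>(std::llround(beat * framesPerBeat)));
    }
    return offsets;
}
```

For a 4/4 bar at 120 bpm and 44.1 kHz the markers fall at frames 0, 22050, 44100 and 66150.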

This method was used in the Dare To Be Digital game, Origamee, to synchronise the audio cues with the beats of the music. However, it didn't work properly as it suffered from the delay of looping static sounds, so the cues would go out of time. Luckily as the tempo for the game music was fast it was hard to spot the timing issues with the cues, and because the music layers were the same length the music itself wouldn't go out of time. Now that I can implement sample accurate stitching this can be used to resurrect the click track, as it overcomes the issue with the loop delay.


Friday, 30 January 2009

DIRAC Time Stretching & Pitch Shifting

Whilst the majority of the recent work has involved a lot of planning and structuring of the tool application and its code base, I have also spent some time investigating the time stretching technique I intend to implement. Over the past few days it has become clear that I am unlikely to succeed in producing a time stretching algorithm capable of the tasks I would delegate to it. There are so many existing algorithms out there, each fairly complicated, that produce results of varying quality depending on the audio signal fed into them. For example, the Rabiner and Schafer method does not work so well for polyphonic sounds, while the phase vocoder method can introduce an audible after-effect known as "phase smearing".

However, I have come across a C/C++ library specially dedicated to pitch shifting and time stretching known as DIRAC. It comes in various flavours but the completely free DIRAC LE version interested me the most. This still provides the quality that you get with the studio and pro levels but puts some limits on things like supported sample rates and number of channels, which don't really affect me too much.

DIRAC was developed by Stephan Bernsee, the founder of Prosoniq, and it can be seen in many professional level products such as Steinberg's Wavelab and Nuendo. It is an incredibly small and simple library containing a total of 7 functions and 3 enumerations, yet the quality is astounding for a free product (let's pretend we didn't see the 9870 EURO price tag for the full version).

After a couple of days of tinkering I've managed to take a sound that's been loaded into FMOD and stretch/squash it using DIRAC. My test program is still a bit flaky though, as I'm still not 100% sure how the sound buffers work. It doesn't help that FMOD works in bytes and DIRAC works in samples, but I'll get there.
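The bytes-versus-samples mismatch comes down to a conversion like the one sketched here. It assumes uncompressed interleaved PCM, and it counts in frames (one sample per channel); whether a given library call expects frames or individual samples is something to check against its documentation. The function names are hypothetical.

```cpp
#include <cstddef>

// Number of PCM frames held in a buffer of byteCount bytes.
std::size_t BytesToFrames(std::size_t byteCount, int channels, int bitsPerSample)
{
    const std::size_t bytesPerFrame =
        static_cast<std::size_t>(channels) * static_cast<std::size_t>(bitsPerSample / 8);
    return byteCount / bytesPerFrame;
}

// Number of bytes needed to hold frameCount PCM frames.
std::size_t FramesToBytes(std::size_t frameCount, int channels, int bitsPerSample)
{
    const std::size_t bytesPerFrame =
        static_cast<std::size_t>(channels) * static_cast<std::size_t>(bitsPerSample / 8);
    return frameCount * bytesPerFrame;
}
```

One second of 16-bit stereo audio at 44.1 kHz is 176,400 bytes, which this converts to 44,100 frames and back again.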


Friday, 23 January 2009

Updating ...

It has been a while since the last post, partly because I needed a little time to relax but primarily because of the updates that had to be made to my team's Dare To Be Digital 2008 entry - a winning entry, if you can believe that. Extra work was needed in preparation for the BAFTAs in March; however, now that is out of the way I can get down to business again with this project.

With the final submission of my project proposal I narrowed down two main features to focus on that I felt were most neglected in current game audio tools. These features are time stretching and sample accurate looping.

Time Stretching

This is the method of lengthening or shortening an audio sample while preserving its pitch. During my research I have not found a single game specific application that supports this feature, meaning that if samples with different tempos were to be layered together as part of one track, they would first have to be edited in another package. In a review of the tool Wwise, Michael Henein stated that he would like to see time stretching implemented in these kinds of tools to allow for more automated syncing with musical cues. I agree with his statement, as tools are supposed to aid the developer - it's no use having one tool if you are quite restricted in how you use it unless you have access to others.

In this project the time stretching will be implemented in a similar fashion to how Sony Acid has used it. With Acid, when you load in a sample you can make it fit with the rest of the project you're working on by stretching or shortening it until its tempo matches the project's tempo. This feature in a game specific tool would allow the audio designer/composer to just dump any suitable samples into their application without having to worry about their pacing, as the tool will be able to make them all fit together.

Time stretching can have some adverse effects on certain types and lengths of samples, such as creating artifacts (clicks and pops) or just making the sample sound unpleasant. This is where the judgment of the user will be required to assess whether more drastic measures are needed, such as recording a new sample or changing the layout of the track.

Sample Accurate Looping

Unfortunately, with the audio APIs I have looked into I discovered (through experience) that when you set a sound to loop there is a slight delay from when it ends to when it loops round to the start. While this delay is inaudible, it does pose an issue where multi-layered, different length sounds are concerned. The reasoning behind this was explained in a previous post.

Why not just use samples that are the same length when layering them, I hear you ask? Well, if you have ever taken a look at a complicated musical composition in any sequencer based program (Cubase, Logic, Reason, Acid etc.) you'll notice that little variations, short phrases and contained loops can be found all over the place, separated by blank spaces. If you were to create a single loop with samples of the same length then each sample would have to be as long as the longest sample. Not only would this incur a large, unnecessary memory/CPU overhead in playing silent passages, it would also restrict how dynamic the composition can be. Individual phrases or loops could never be randomly played at any time unless an extra layer was added just for those bits - a layer that would again have to be the same length as the longest sample.

With sample accurate looping, sections that have a lot of silence would simply not be played, reducing memory footprint as well as CPU overhead. Compositions could also be made more dynamic by allowing the composer to define sections that can have variations played in them, with a particular variation picked in real time depending on the game state.

